Publications

2024:

A. Roy, R. Moulick, V. K. Verma, S. Ghosh, A. Das; Computer Vision and Pattern Recognition (CVPR), 2024

[Project] [Code] [Poster]

(Best Paper Honorable Mention - Research Track)

S. K. Perepu, K. Dey, A. Das; ACM Joint International Conference on Data Science & Management of Data (CODS-COMAD), 2024

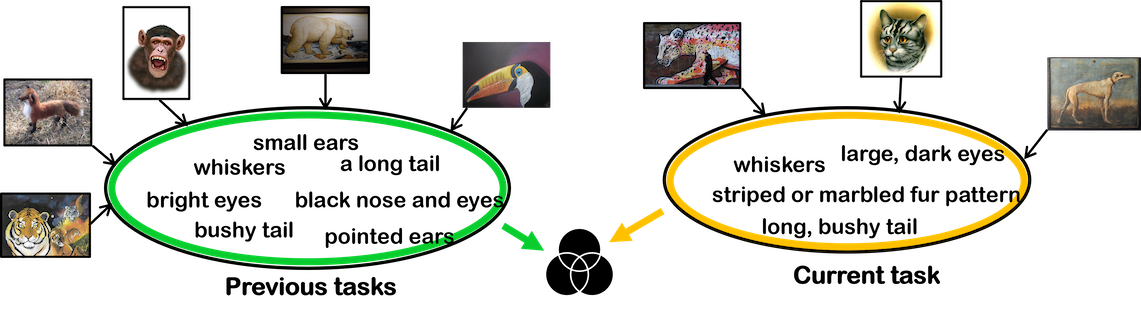

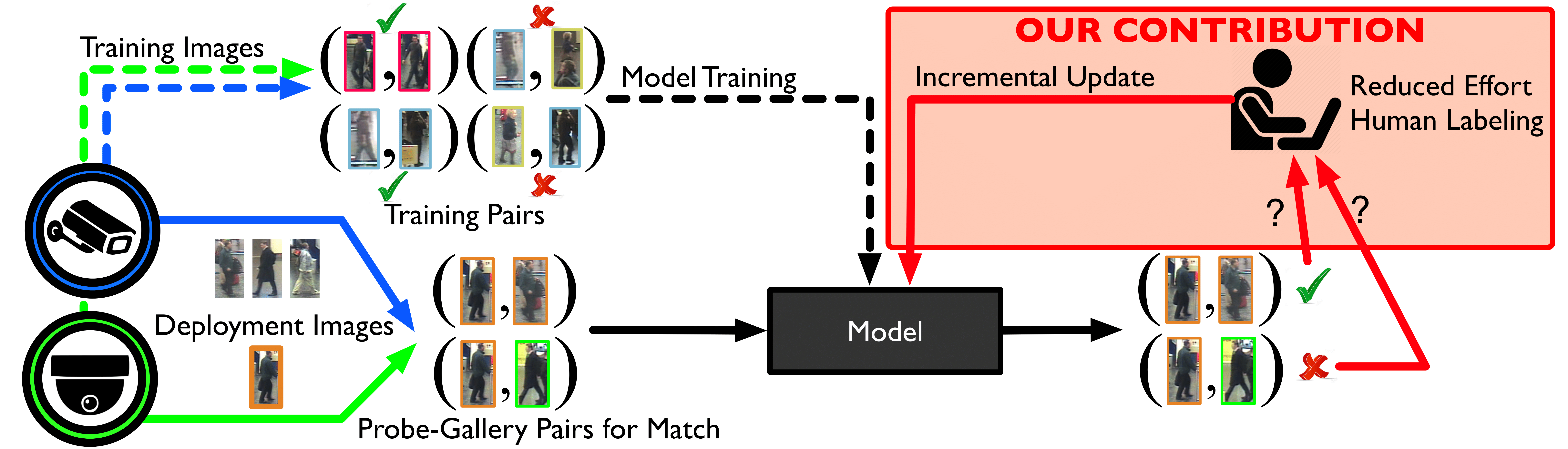

A. Roy, V. K. Verma, S. Voonna, K. Ghosh, S. Ghosh, A. Das; International Conference on Computer Vision (ICCV), 2023

[Project] [Code]

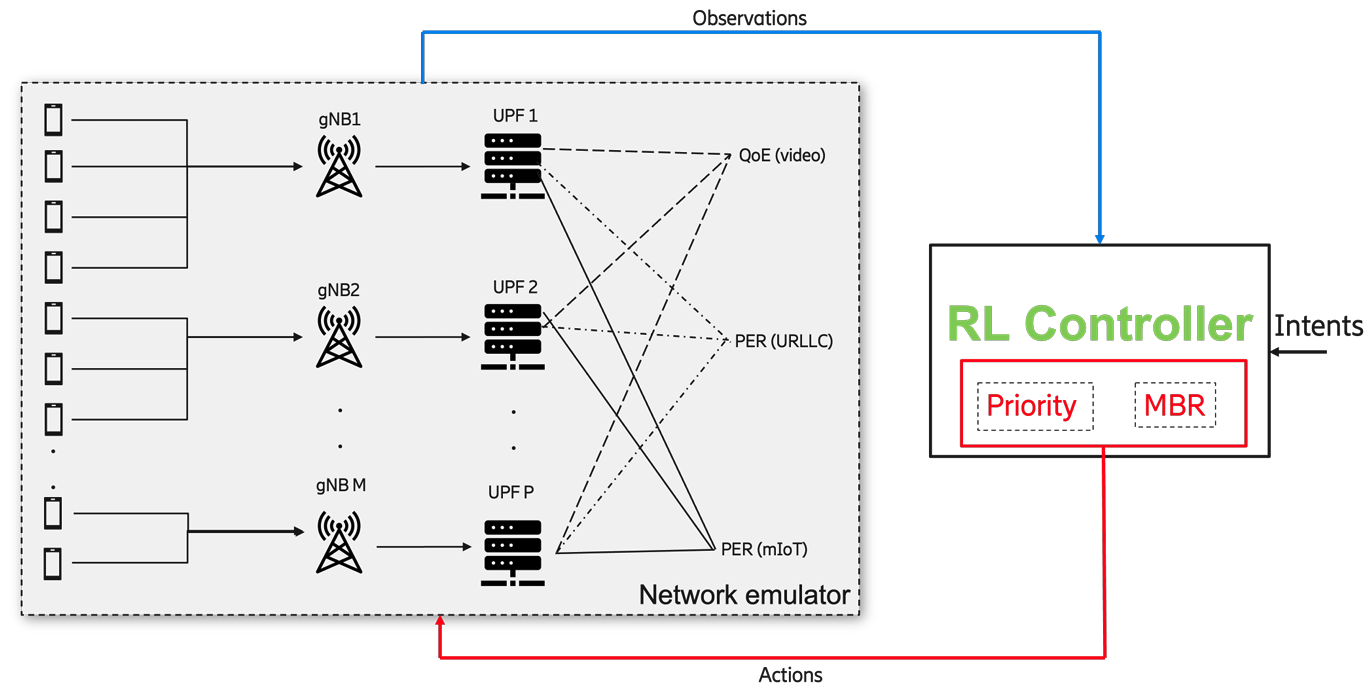

O. Chakraborty, A. Sahoo, R. Panda, A. Das; International Conference on Learning Representations (ICLR), 2023

[Project] [Code]

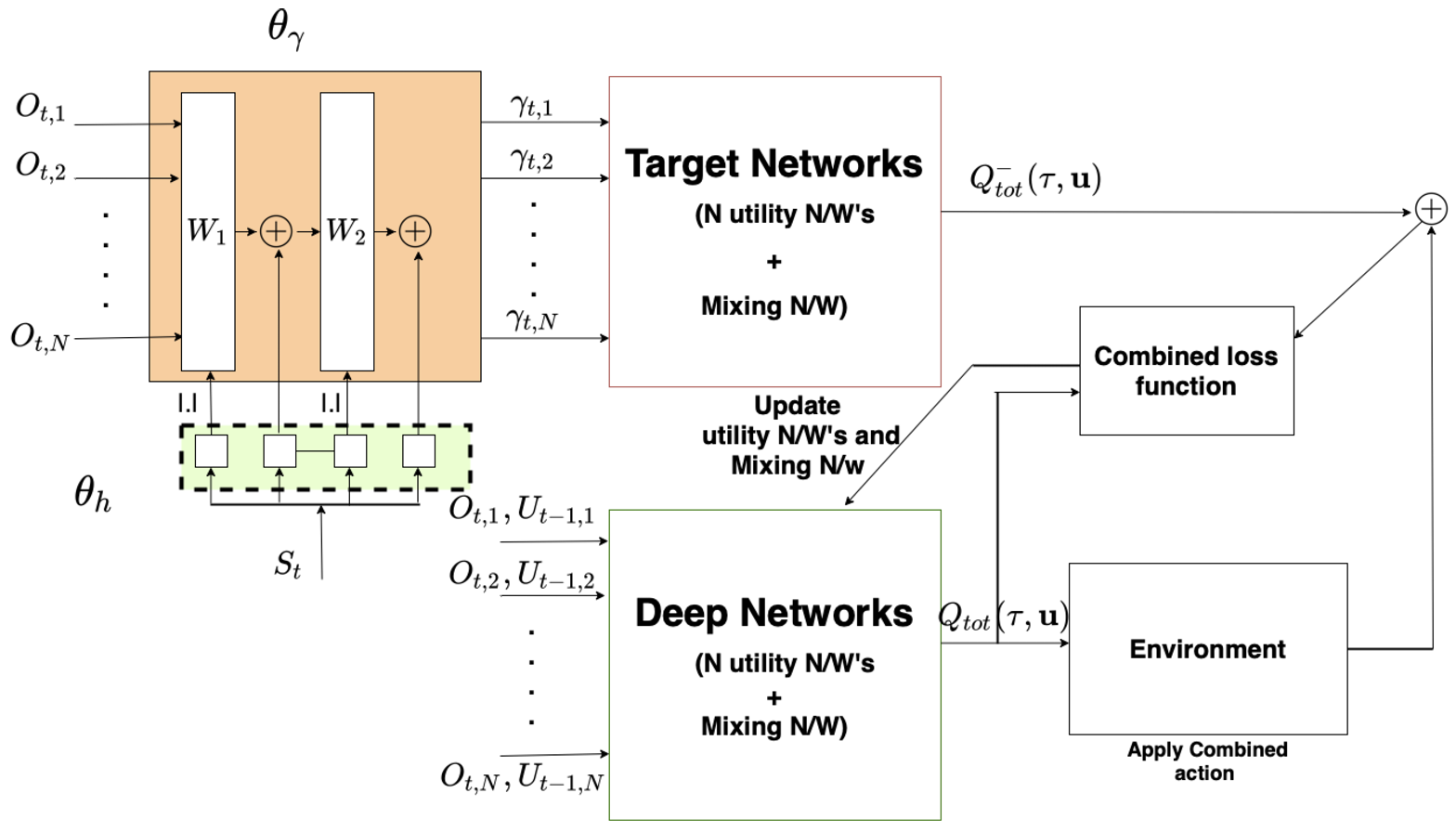

K. Dey, S. K. Perepu, P. Dasgupta, A. Das; IEEE NetSoft, 2023

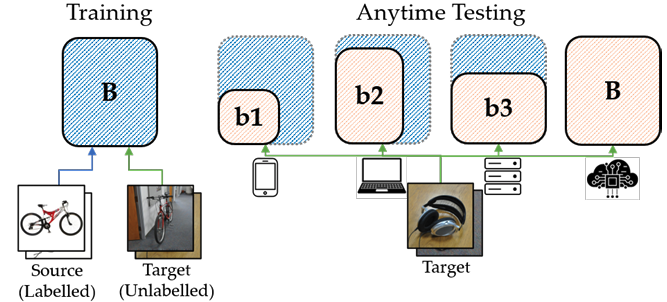

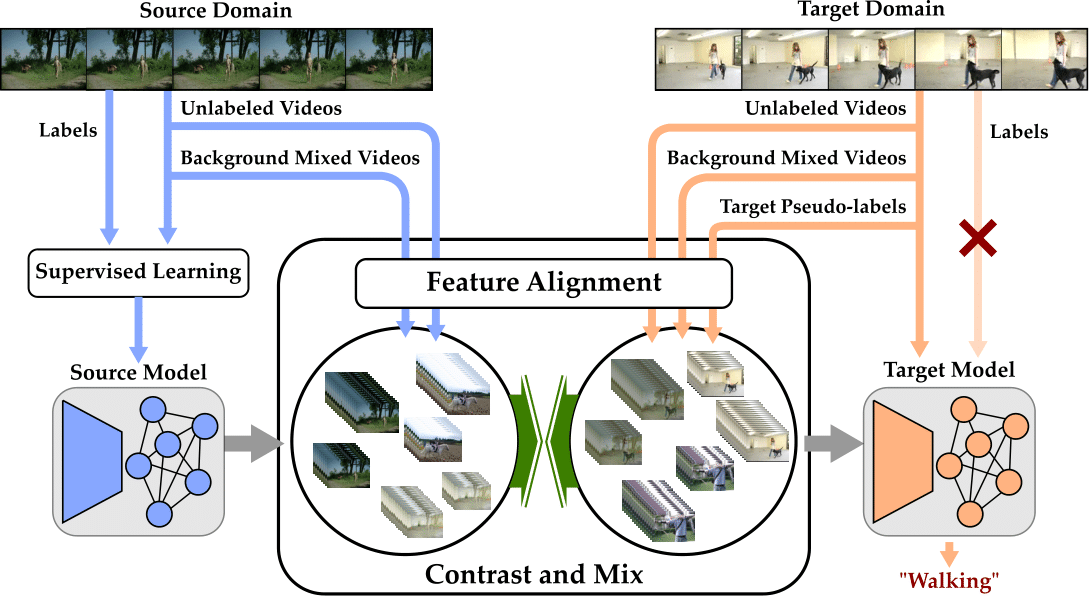

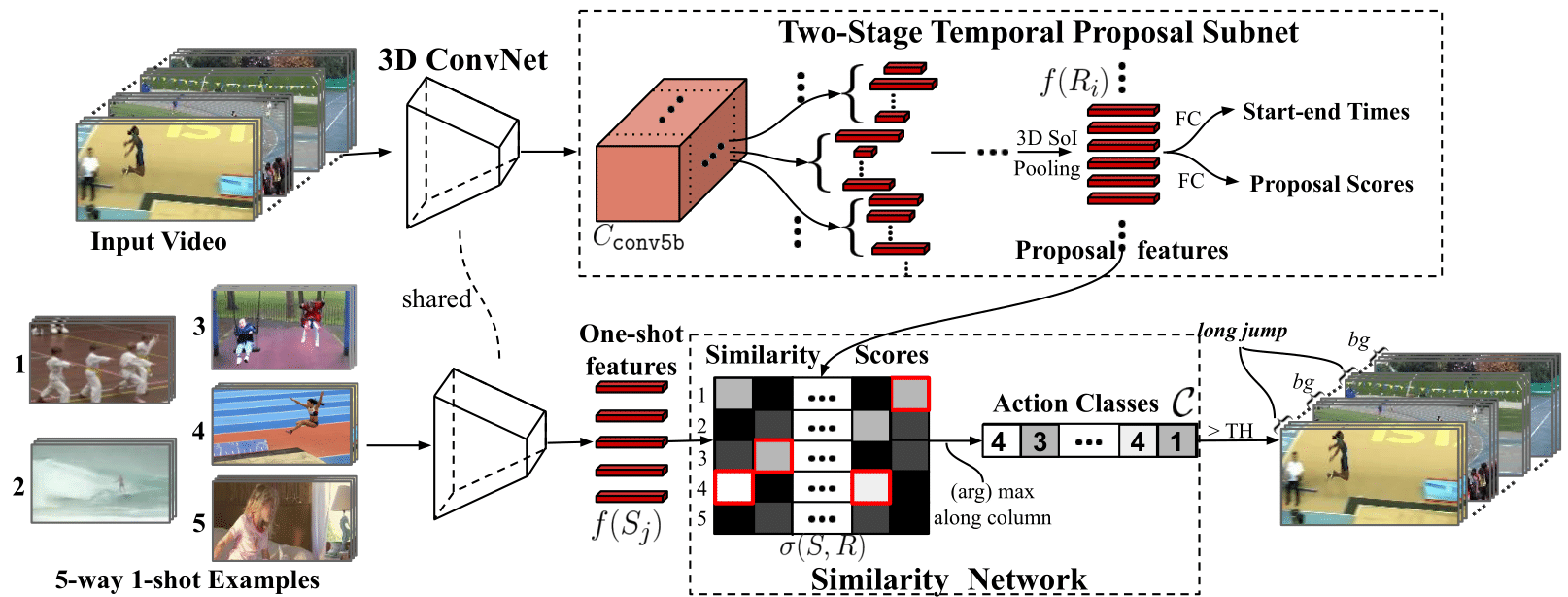

A. Sahoo, R. Panda, R. Feris, K. Saenko, A. Das; Winter Conference on Applications of Computer Vision, 2023.

[Project] [Code] [Poster] [Video presentation] [Slides]

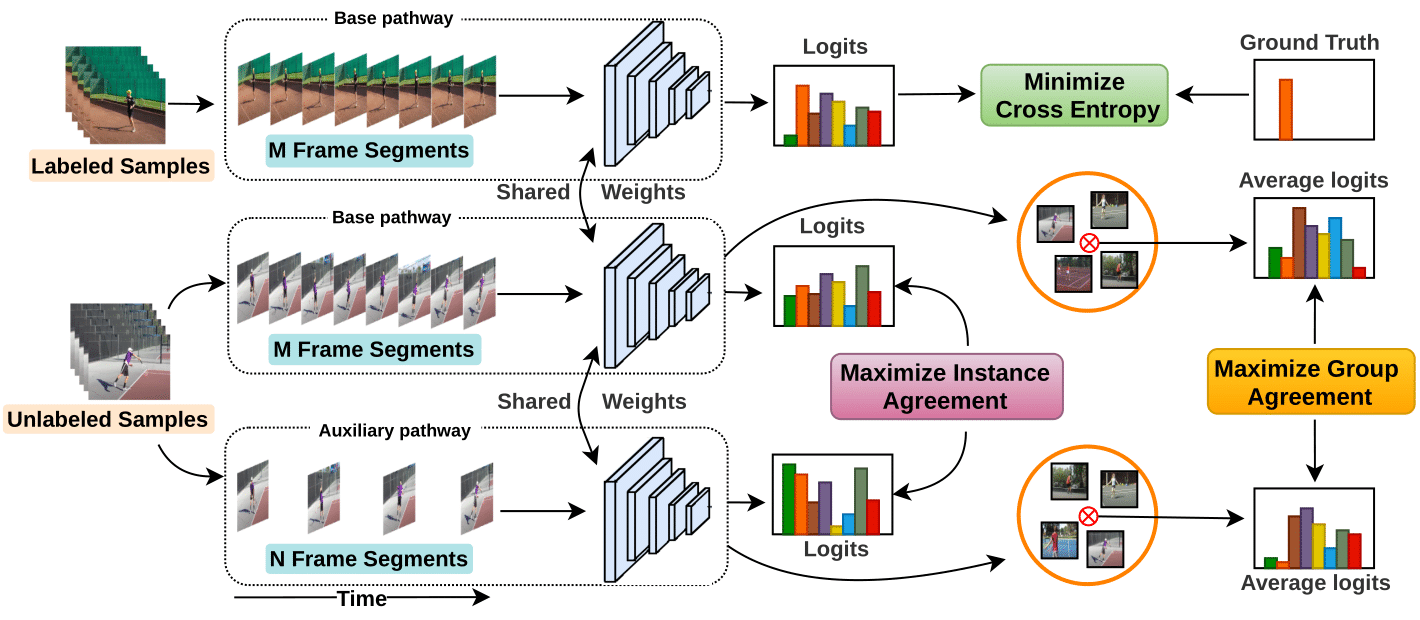

A. Sahoo, R. Shah, R. Panda, K. Saenko, A. Das; Neural Information Processing Systems, 2021.

[Project] [Code]

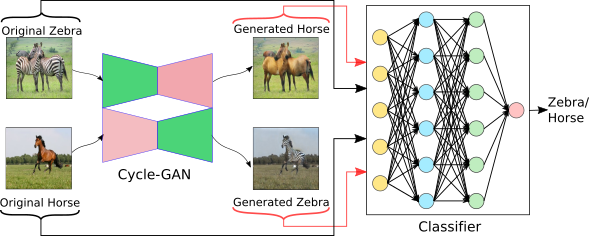

A. Singh, O. Chakraborty, A. Varshney, R. Panda, R. Feris, K. Saenko, A. Das; Computer Vision and Pattern Recognition (CVPR), 2021.

[Project] [Code] [Poster] [Video presentation]

A. Sahoo, A. Singh, R. Panda, R. Feris, A. Das; ECCV workshop on Imbalance Problems in Computer Vision (IPCV), 2020.

[Code] [Virtual Presentation]

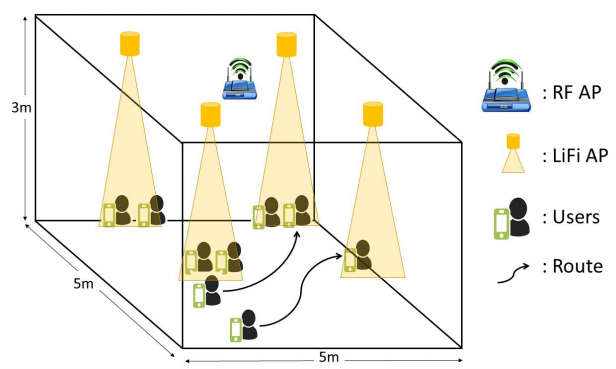

R. Ahmad, M. D. Soltani, M. Safari, A. Srivastava, A. Das; IEEE Access, 2020.

H. Xu, X. Sun, E. Tzeng, A. Das, K. Saenko, T. Darrell; IEEE CVPR workshop on Visual Learning with Limited Labels, 2020.

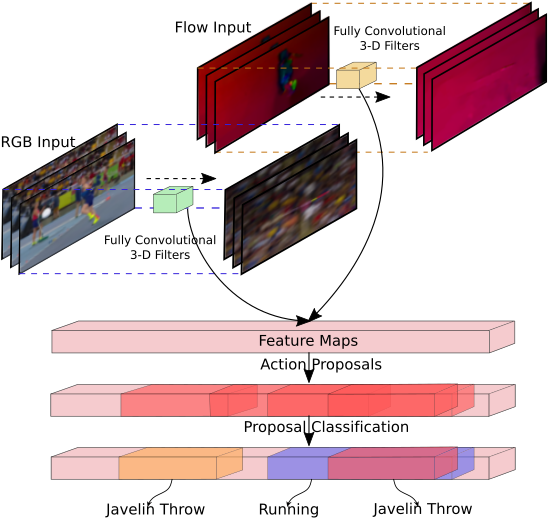

H. Xu, A. Das, K. Saenko; IEEE Trans. on Pattern Analysis and Machine Intelligence, 2019.

V. Petsiuk, A. Das, K. Saenko; British Machine Vision Conference, 2018 (Oral)

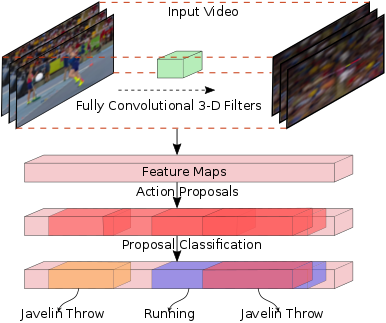

H. Xu, A. Das, K. Saenko; International Conference on Computer Vision, 2017

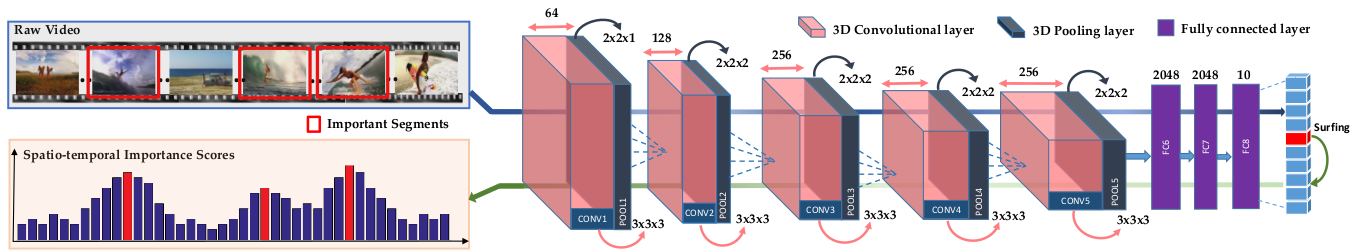

R. Panda, A. Das, Z. Wu, J. Ernst, A. Roy-Chowdhury; International Conference on Computer Vision, 2017

[Supplementary]

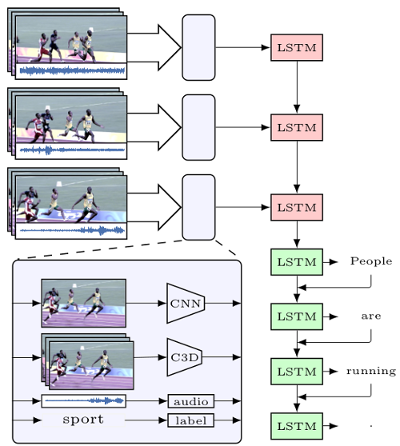

V. Ramanishka, A. Das, J. Zhang, K. Saenko; Computer Vision and Pattern Recognition, 2017.

[Project] [Code] [Poster]

2016:

A. Das, R. Panda, A. Roy-Chowdhury; Computer Vision and Image Understanding, 2016.

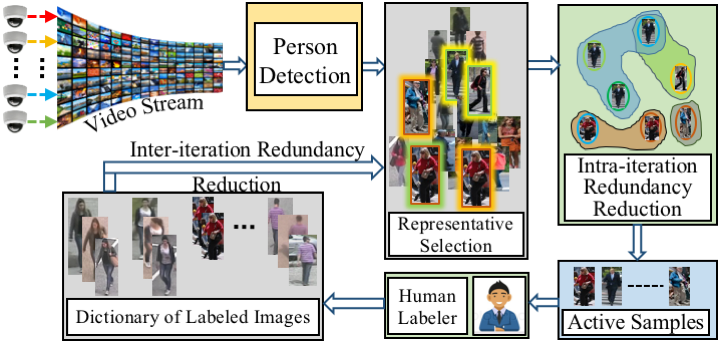

A. Chakraborty, A. Das, A. Roy-Chowdhury; IEEE Trans. on Pattern Analysis and Machine Intelligence, 2016.

N. Martinel, A. Das, C. Micheloni, A. Roy-Chowdhury; European Conference on Computer Vision, 2016.

[Supplementary]

V. Ramanishka, A. Das, D. H. Park, S. Venugopalan, L. A. Hendricks, M. Rohrbach, K. Saenko; ACM Multimedia, 2016 (MSR Video to Language Challenge).

R. Panda, A. Das, A. Roy-Chowdhury; IEEE International Conference on Image Processing, 2016.

[Supplementary]

R. Panda, A. Das, A. Roy-Chowdhury; International Conference on Pattern Recognition, 2016.

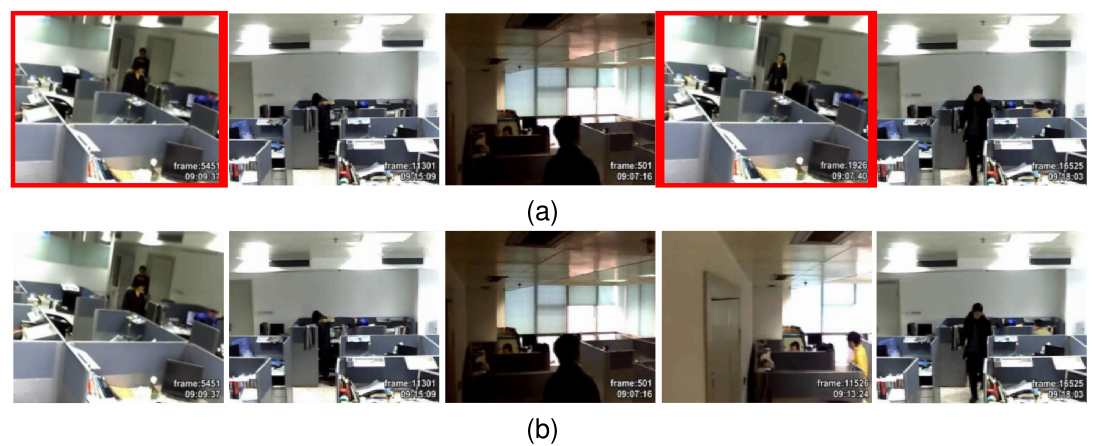

In this paper, we address the problem of summarizing long videos captured by multiple cameras by forming a joint embedding space, which is obtained by solving an eigenvalue problem. The embedding is learned by exploiting intra-view similarities (i.e., between frames of the same video) as well as inter-view similarities (i.e., between frames of different videos that view roughly the same scene). To preserve both types of similarity, a sparse subspace clustering approach is used, with its objective and constraints adapted to the different requirements of the two cases. We obtain the embedded representation of all frames from all videos by unifying the two types of similarity in a block matrix, which leads to a standard eigenvalue problem. After obtaining the embeddings, we apply sparse representative selection, as in the above paper, to produce the joint summary. Experiments on both multi-view and single-view datasets show that this generalized method applies to a wide range of settings.
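The block-matrix embedding step can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. A Gaussian kernel (with a hypothetical `sigma` parameter) stands in for the sparse-subspace-clustering similarities. The "standard eigenvalue problem" is assumed to be that of the symmetric normalized graph Laplacian, as in ordinary spectral embedding. The function names are hypothetical, and the sparse representative selection step is omitted.

```python
import numpy as np

def gaussian_affinity(A, B, sigma=1.0):
    # ASSUMPTION: a Gaussian kernel replaces the sparse subspace clustering
    # similarities used in the paper. Rows of A and B are frame features.
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def joint_embedding(views, dim=2, sigma=1.0):
    """Embed the frames of all views into one space via a block affinity matrix.

    views: list of (n_v, d) arrays, one per camera (hypothetical input format).
    Returns an (sum n_v, dim) array of embedded frame coordinates.
    """
    # Block (u, v) holds intra-view similarities when u == v and
    # inter-view similarities otherwise; the full matrix is symmetric.
    blocks = [[gaussian_affinity(Xu, Xv, sigma) for Xv in views] for Xu in views]
    W = np.block(blocks)
    np.fill_diagonal(W, 0.0)  # ignore each frame's similarity to itself
    d_inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    # ASSUMPTION: symmetric normalized Laplacian L = I - D^{-1/2} W D^{-1/2}.
    L = np.eye(W.shape[0]) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    # eigh solves the symmetric eigenproblem; eigenvalues come back ascending.
    _, vecs = np.linalg.eigh(L)
    # Skip the trivial first eigenvector and keep the next `dim` as coordinates.
    return vecs[:, 1:dim + 1]
```

For example, `joint_embedding([X1, X2], dim=3)` with two views of 5 and 4 frames returns one 3-D coordinate per frame (9 rows in total). A representative-selection step would then operate on these rows.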



2015:

A. Das, R. Panda, A. Roy-Chowdhury; IEEE International Conference on Image Processing, 2015.

A. Das, A. Chakraborty, A. Roy-Chowdhury; European Conference on Computer Vision, 2014.

[Supplementary] [Dataset] [Code] [Bibtex] [Poster] [Video spotlight]

A. Das, N. Martinel, C. Micheloni, A. Roy-Chowdhury; IEEE Trans. on Pattern Analysis and Machine Intelligence, 2015.

[Supplementary]